| . . . Wittgenstein |

| . . . 2001-10-06 |

Philosophers often behave like little children, who first scribble random lines on a piece of paper with their pencils, and then ask an adult "What is that?"

-- Ludwig Wittgenstein's "Big Typescript"

| . . . 2001-10-10 |

At the doctor's office yesterday (it's been a busy week here), I saw a poster with a dozen cute little cartoons of the Warning Signs of Diabetes. "Excessive Hunger" was a guy shoving a cake into his mouth, "Sexual Dysfunction" was a sad-faced man lying in bed with a sad-faced woman, and so on. But "Vaginal Infection" was a woman holding a sign in front of her torso that read "Vaginal Infection." That is to say that the warning sign of a vaginal infection is literally a "Vaginal Infection" warning sign.

Maybe this is only interesting if you're reading Wittgenstein....

| . . . 2001-10-14 |

The Indefinite Conversation

A reader either cheers me on or lumps me in, I can't tell which:

| what breed of idiots |

Which reminds me again how hard it is to keep that greased-piglet of a "we" out of political discourse, and how painful it is to recall that the nested affiliations "a couple of webloggers who read each other," "liberals who read anything," "the American voting public," and "global humanity" are not precisely interchangeable. For example, the first group in that list -- and possibly the second as well -- is easily outnumbered by the group of "people who decide how to vote based on TV commercials." (After which, to be sure, we all collectively enjoy the results.)

Colette Lise joins a particularly exclusive community of shared trauma:

| That Warning Signs of Diabetes thing is in my doctor's office, too. Creepy. Why are you reading Wittgenstein? |

| I have a toothache. |

Or at least everyone is moaning. And why else would they moan?

| But if here we talk of perversity, we might also assume that we all were perverse. For how are we, or B, ever to find out that he is perverse?

The idea is, that he finds out (and we do) when later on he learns how the word 'perverse' is used and then he remembers that he was that way all along. |

| - Ludwig Wittgenstein, "Notes for Lectures on 'Toothache' and 'Toothache'," Philosophical Occasions |

| . . . 2001-10-18 |

The Indefinite Conversation, cont.

Lawrence L. White kindly points us to T. P. Uschanov's Icy Frigid Aire:

| There's so much to like here. At the risk of revealing myself as an exoticist, I confess I find the very idea of Finland intriguing -- European culture on the tundra -- as well as his ethnic background, and his use of the old English academic schtick of initials instead of names. Nice theatrical touch. Who wouldn't like a grad student who calls himself a "philosopher"? There aren't that many professors with such moxy. He is also a non-tedious example of how to use the web to express intellectual interests. It's as if he invited us into his apartment, showed us the views outside the window, what's on the bookshelf, the record player, his desk. (Showing instead of telling us what he likes & doesn't like.) Google searches for Mr. Uschanov's name come up w/even more. For a while, at least, he seems to have been everywhere talking about everything. There aren't many entries for 2001. Pages on the site have been updated recently, so I don't think he's burned out. Perhaps he has had to buckle down at school.

Although there is not much of his writing on the page, it's good stuff. I think his two Wittgenstein essays are first rate, & the music writing snippet is interesting, especially for not going on too long. |

Uschanov is indeed an online treat. On Usenet alone, he quotes Shangri-Las interviews, runs a Golden Oldies Lyrics Quiz, and supplies helpful reminders:

But philosophy isn't love of truth. In Greek,

"agape" = 'love, affection'

but

"philia" = 'friendship, comity'

Which means a world of difference.

A guide to personal misuse of Uschanov's longer essays:

| . . . 2001-10-21 |

God told me to

| "All these arguments might look as if I wanted to argue for the freedom of the will or against it. But I don't want to." -- Ludwig Wittgenstein |

I wouldn't describe the syllogism

Much tidier then is T. P. Uschanov's:

In everyday life, the will exerts a level of influence that even the willer often finds embarrassingly feeble. (E.g., "I will now sit down and write that novel.") Sure, if you asked me why I took a computer programming job, I'd say "Because I decided that I wanted money more than I wanted to starve." But if you asked me why I walked into a lamp post, I could only answer, "Because I wasn't paying attention." If you went on to ask, "Why weren't you?" I might go on to answer "Because I was trying to remember all the verses to 'Duke of Earl'," but if you continued your interrogation, I'd quickly have to rest with "Hey! Lay off! I didn't do it on purpose!" The matter might then be dropped, but note that if I had been driving a car when I struck the lamp post, the justice system would hold me responsible despite my free will's lack of exercise.

On the deterministic side, if I lent a fiscally irresponsible friend $50 and he spent it all on a bottle of armagnac which he then dropped and shattered on the way home from the liquor store, I might tell myself, "Well, that was predictable." But that would just be an attempt to redirect some of my exasperation from my friend to myself; despite my claim to prescience I would not, in fact, declare any more surprise on hearing that he had instead left the money behind in his pants after impulsively joining a parade of nudists at the Folsom Street Fair.

I can't remember early polytheists or skeptics showing much interest in free will or determinism or their influence on the justice system. If a Greek god punished you by prodding you into a lousy decision, that wouldn't spring you from the local human courts: the mortal legal system's reaction counts as part of the punishment. And when loosely deist types like Unitarians or Stoics say "You can't fight city hall," they seem to mean more "You'd be a fool to try" than "City hall controls all your thoughts and actions."

No, determinism doesn't become a pressing issue until you get to a single omnipotent omniscient 100%-morally-good creator who nevertheless judges and punishes its own creations. (I guess it's true that Edgar Bergen disciplined Charlie McCarthy once in a while, but still....)

And then it continues pressing in a slightly more rumpled way with Newtonian mechanics and its catchy "give me a snapshot and I will predict the world" approach. Since science relies on reproducible results, it naturally tends to talk about what's predictable. And who doesn't want to sound scientific?

Which has led to some odd passes in the humanities, particularly in their popularized forms.

Luckily, behaviorism is out of fashion and Newtonian mechanics is not the only role model around.

In a rare fit of sophistry, Uschanov challenged a non-determinist to show one action that happened without any cause. Although "causality" has its own problems, causality is not the issue here, a conscious decision being as valid (if not as common) a "cause" as gravity is. What's at stake in determinism is predictability. And it's not hard to find unpredictability and causality coexisting peaceably in twentieth-century physics as well as in ordinary language use.

That doesn't mean that "the soul is to be found in quantum undecidability" or any such nonsense. No, the individual actions of an individual human (or canine or feline) mind are indeterminate less because of anything one could call free will -- which usually plays a negligible role -- than because of all the other crap flying around the infinitely intermingled systems of biochemistry, anatomy, self-organizing neural nets, interdependent modular processes, human society, lamp post construction, and so on. If someone converts to Scientology, and we find that on the day of his conversion he suffered a mild stroke, we might say that the stroke caused the conversion. But even if we were told the exact location of the stroke beforehand, would we have been able to predict that conversion? Although Scientologists are undoubtedly working on the problem, I'd still say no.

Decision-making consciousness is much more a fuzzy outline of convenience than a coherent all-powerful unit, but it would be silly to deny its existence on that basis: regardless of implementation details, the bundle of events found within those shifting boundaries do take place, after all, and if a court wants to pay special attention to those events (not all courts do), it can go right ahead. (I might also note that the possibility of rational decision-making is not a particularly comforting thought unless we're all rationally free-willing from a homogeneously shared set of rules and goals. Right here on the web, for example, you can find people rationally free-willing from pretty scary premises.)

Doctrinal free will seems hubristic when not trivial, but strict determinism is about as rigorous as saying that "love draws all objects together": a truly radical skeptic wouldn't affirm something which can neither be refuted nor confirmed by evidence. As a scientific hypothesis, determinism is meaningless, being unverifiable. As an ethical aid, it's meaningless by definition. That doesn't leave anyplace from which it can derive meaning -- except maybe the rosy glow of unwarranted presumption:

| "... simply because I happen to enjoy knowing things most people don't know." -- conclusion of "The Standard Misinterpretation of Determinism" |

| . . . 2001-10-23 |

Errata

Regarding our imaginary adventure, Aaron Mandel worries us:

| I think the implication of the "A in B" title scheme is not just that everything turns out okay, but that A, the sympathetic star of some extended series of episodes, comes out of B essentially unchanged. At least, that's why I found CNN's slogan unnerving: it's reversed.

And, less whimsically, it implies gently that the anthrax came from outside America, while I'm starting to hear serious mumbles to the effect that the perpetrators may have been domestic terrorists. |

While, regarding our Worst Episode Ever, Lawrence L. White reassures us:

| Locating predictability as the turning point is, dare I say it, Wittgenstein-like: if you can't move the rock, find a different spot for the lever. One consideration: note that the legal problem is only interested in things after the fact. Examples from law seem tainted with a particular pathology, akin to the pathology of taxation-phobic voters preferring to spend more on punishing folks than on the less expensive & more effective (crime-prevention wise) technique of educating them.

I liked the entry because I have what Wittgenstein characterized as the philosophical illness. & I felt bad to think I might have infected you. I am, in part from the Wittgenstein treatment, mostly free of such vexations. Meaning I wouldn't think to try to think about those things again. Which is another reason I find Uschanov fascinating. In his case the medicine stokes the disease. |

To retreat to ordinary English usage: Could an individual change something that's already happened? No, that doesn't seem meaningful. Could she change something that hasn't happened yet? Certainly not -- if it doesn't exist, it can't be changed. Can she be part of something happening? Certainly she can, and the only way around that is to radically redefine what "she" refers to. That's where "free will" comes in, but (unlike schools) courts can have nothing to say about the present tense (except "I object!" or such like)....

As for personal regret, the "philosophical illness" holds even less terror than anthrax. No, what really bothered me about my post was first, that it seemed misshapen as argumentative prose, and second, more damningly, that it seemed redundant: that I'd added nothing to what was already available, even if we restrict ourselves to the Web.

Referring to the Bill of Artifactual Values kept in my wallet at all times:

| . . . 2001-11-04 |

And Ross Nelson draws several recent threads together by sending me a reminder of Wittgenstein's poker and Popper's riposte.

Ah... Austrians, violence, academic bitchiness, moral rules, and a typical opportunity for exercise of free will: it's completely up to the reader to decide which story to believe precisely because each is so equally, miserably, trivial.

| . . . 2001-11-08 |

One reader adds to our dossier on glassy, gassy Thomas Bernhard:

"Woodcutters is a fix-up of the last ten pages of Wittgenstein's Nephew from 2 yrs earlier, where it is not Bernhard but the titular Paul Wittgenstein who provides the final blow (missing from the novelization): 'You too have become a victim of the imbecility and intrigues and underhand dealings that go on at the Burgtheater. It doesn't surprise me. Let it be a lesson to you.' This suggests that Bernhard was too socialized for his own good and hated himself for it."

While another adds to our ball of confusion:

"#17140\WA IcePrincess"

| . . . 2001-12-22 |

By writing out a puzzle, Lawrence L. White finds the solution (and as a side-effect shares it):

In the latest issue of Context a piece on Kathy Acker brought to mind an old problem.

Ms. Wheeler talks a lot about Acker's ambitions (to change the world w/words) but not much about how she carried it out. & the ambitions are read straight off. Any clever workshop student knows "show, don't tell," and, as Marx put it, "every shopkeeper can distinguish between what somebody professes to be and what he really is."

To be fair, there's a lot to say about stated intentions. You can at least repeat them. There may not be that much to say about the means art uses. & of the little that has been said, most of it is painfully obvious. But maybe that's okeh. Maybe there should be less talking about poems & more reading them.

Then I noticed how I had not managed to avoid my own problem. Wittgenstein says, somewhere, something like what is needed is not new theories but reminders of what we wanted to do in the first place. So reminding yourself which problems are bothering you might not be a complete waste of time.

Better yet, why not make talk about poems as interesting as the most interesting poems?

| . . . 2002-08-28 |

Whereof one cannot speak, thereof one must gesticulate wildly

| . . . 2003-12-05 |

From an interview with Richard Butner:

I once had a creative writing teacher tell me that he didn't understand why authors used science fiction or magical realism to tell a story or impart a theme. Why do you think we do, when good old realism might do the trick?

"I sit with a philosopher in a garden; he says again and again 'I know that that is a tree,' pointing to a tree that is near us. A second man comes by and hears this, and I tell him: 'This fellow isn't insane: we're only doing philosophy.'"

--Wittgenstein, On Certainty

| . . . 2003-12-07 |

Francis at the Mitchell Brothers Theater

Lawrence L. White extends the popular series:

Jessie Ferguson is okeh with lack of consensus in "pure aesthetics," but how are you going to keep it pure? & I'm not talking about keeping the non-aesthetic out of aesthetics: how do you keep the aesthetic out of sociology, history, etc?

I like those questions.

I have this idea (one that I can't explain or justify to any acceptable degree) that it's all about poesis, that is, "making" in a general sense, "creation," as in things made by humans. Things such as society. That the poem and the economy are variants (mostly incommensurate) of the same drive. That "I'm going to put some stuff together" drive. To go kill me that deer. To clothe my child. To flatter the chief. To exchange for some stuff that guy in the other tribe put together.

Does this insight have any practical application? Not that I relish exposing my reactionary tendencies (yet again), but among those practicing sociological versions of aesthetics, the cultural studies crowd, I'd like less of the scientistic model — let me tell you how things are!— and more of the belletristic model — here's something I wrote! I would like to practice good making in criticism. (& as an inveterate modernist, I'm willing to call obscure frolics good making.)

But what of socio-economics? Is that supposed to be more like a poem, too? Perhaps there are other models. If I can throw out another murky notion to cushion my fall, Wittgenstein seems to say as much when he speaks of "grammar." I always took that term to contain potential pluralities, as if every discipline had a somewhat distinct way of talking, of presenting evidence, making inferences, etc. Which is not to say everything goes. He also spoke of needing to orient our inquiries around the "fixed point of our real need." Not that there's much consensus on that. But let me offer a suggestion: the inability of the English Department to come to a "consensus" severely debilitates its ability to ask for funding. Because the folks with the purse do want to know what exactly it is that you do.

Let me try it from another angle, through another confusion, this time not even so much a notion as a suggestion. Allen Grossman, the Bardic Professor, once reminded us that "theater" and "theory" have the same root. He, too, seems not to have said something Bacon didn't know. Grossman, though, as a reader of Yeats & Blake, wouldn't take it where our Francis wants to go. Perhaps the Baron Verulam's heart might be softened by this plea: isn't the point of the socially inflected sciences to make things as we "would wish them to be"? For example, don't the "true stories out of histories" serve to help us order our current situation, despite Santayana's overstatement of the case? (John Searle once told us in lecture that the drive behind philosophy was nothing more radical than simple curiosity. I found the answer to be unsatisfying philosophically and reprehensible politically.)

To add a trivial one, though: Hasn't the English Department's problem always-already been self-justification? Poetry and fiction weren't very long ago exclusively extracurricular activities, and it takes a while to explain why it should be otherwise. Isn't jouissance its own reward? Or do students pay to be titillated and spurred forward by the instructors' on-stage examples? (No wonder consensus isn't a goal.)

| . . . 2003-12-13 |

Francis on the Verge of the Shadow of the Oversexed Women

David Auerbach writes:

... as far as LL White's words go, I do find it interesting (in purely non-judgmental fashion!) exactly how mind-independent the grammars of humanities academics have become. I.e., to see a "grammar" of study where interpretation of what the words actually mean is so pluralistic as to have no significance to the work itself, and thus most purely embody Wittgenstein's concept of a language not being able to contain a meaning /intended/ by the speaker, you would go straight to technical academic work. I don't just mean the cultural Marxist stuff spouted by Jameson, but someone like Marjorie Perloff, for whom the placement of certain key terms ("private language" comes to mind) in seemingly arbitrary fashion acts as legislation of the use of those terms in future theses, dissertations, and books--again, without having any logical derivation from the past or to the future! (Logic and rationality being the writer's intention, not an intrinsic property of the writing.) I don't see "consensus" as being on the menu any more than "argument" is (both being elements of rational discourse); there is only the grammar.

(See also.)

I don't mean this as an exaggeration or a caricature: spend some time in the more happening fields of the humanities and there's a noticeable lack of good arguments.

What scares me is how little self-justification seems to exist these days, not how much. Those able to promulgate the grammar in productive (vs. non-productive) ways are accepted into the fold and the game continues, with very little heed paid to what material sustenance is required to keep the game going. Reality should intervene in the next decade or two and remove many of the participants, and our assistance isn't required there.

But I don't feel especially happy after talking about this stuff, so onto "In the Shadow of the Oversexed Women"!

| . . . 2004-01-13 |

Flogging the Dead Bardic Mule, cont.

Lawrence La Riviere White again:

Regarding Auerbach's response to my response, I will cop to the charge. I have made my project (if something pursued so diffidently could be called a "project") exactly that, mind-independent poetry and language. Or rather, the limits of mind-independence in poetry and language, just how far can that hypothesis, that the poem comes from "Outside," that writing creates rather than communicates meaning, go. But before sentencing, I would like to make two comments in my defense:

It sounds as if White's post-adolescent poetic tastes changed in a way somewhat like my own. Not uncommon, I think, and resembling the common move from "readerly" fiction to "writerly" fiction. In both cases, we exchange some of the mixed pleasures of heroic identification for the mixed pleasures of ethical socialization (or, if you prefer, of obtuse alienation -- or, if you prefer, of an even more pathetic form of heroic identification). I maintain John Berryman and James Wright as more-than-usually mixed pleasures from the bad old days, but Yeats plays well enough in both camps.

1) I believe I come by my declaration of mind-independence honestly. My attempts to think about writing anteceded my attempts to write. & I began as a very mind-dependent, or to be more explicit, ego-dependent poet. Suffice it to say that my poetry hero was Robert Hass. I know what you think about that. But somewhere a switch took place. At the start I had come to poetry wanting something from it (I wanted to become through poetry a voice of wisdom, someone who sounded deep & therefore attractive (it was California!)), but then poetry started wanting things from me. One of the first things was wanting me to write better poems. (Other poems showed me how bad my poems were.) Which would require more understanding of what poetry is. (I needed to focus more on my instrument and less on the feelings I wanted to express.)

There's the Yeats line about "the supreme theme of art and song" which flickers between the genitive and the substantive, between the great romances that poetry writes about (e.g. Motley Crue's "Girls Girls Girls") and the great romance that is poetry itself. I know I have a weakness for romantic claptrap (that Yeats-peherian rag, so transcendent, so grandiloquent), but it felt as if poetry told me its concerns, its needs, were greater than mine. Ask not what poetry can do for you, ask what you can do for poetry.

2) Given Auerbach's evidence against me, I would gladly roll over on the ring-leader, Marjorie Perloff, but as I always say (& I do repeat myself more & more), you can't fight dumb with dumb. & generalities are dumb, much dumber than (most) of the people who use them. I don't believe, & neither do many (not all, perhaps not even most) true Wittgenstein scholars (that is, people who really know, unlike me), that he had a "concept of a language not being able to contain a meaning intended by the speaker." I think what he did have was an aversion to hypotheses such as "the meaning of language equals the intention of the speaker." Which seems to be what Auerbach is saying (although I could very well be wrong here) when he says, "logic and rationality being the writer's intention, not an intrinsic property of the writing." Analytic philosophy (which is one sector of the humanities that has fiercely quarantined itself from the rest) is a graveyard of such hypotheses.

& to bring a little focus to my oh-so-vague notion of "grammar," I would never consider any useful grammar to be purely self-referential. Useful grammars glom on to problems, that is, resistance points. Things that have eluded all previous formulations, that constantly call for new formulations (grammars being generative). Now I'll admit that tenure is a problem, but such a tedious one! Not nearly romantic enough for my taste.

Yes, there are few good arguments in the humanities. But perhaps we could find a virtue in this (can I get any fuzzier? As I have said from the start, I am guilty!), or at least we could become virtuous enough to stop pretending we're arguing, stop claiming the kind of conclusiveness, the kind of judgmental righteousness, that is the just reward of a good argument. Am I just applying more fuzz-tone & vaseline on the lens? Consider this: my introduction to the humanities was through analytic philosophy. Now those people are sticklers for argument. & what does it get them? Remember the joke from Annie Hall, how his mom couldn't think about suicide because she was too busy putting the chicken through the deflavorizing machine? That's analysis for you. One big deflavorizing machine. For all their rigor, they don't end up, at the close of the year's accounts, having contributed any greater number of interesting or useful essays than the cultural critics.

When I said I'm guilty, I meant (my intention!) I agreed w/most of what he said. I let the criminal trial conceit carry me away a bit, or rather I let the conceit reveal more of my defensiveness, resentment, & meanness than I care to show. After all, I write to create a better (smarter, more graceful & considerate) persona than I communicate in the day to day.

As for our agreement, when he says, "I don't feel especially happy about talking about this stuff," I take it for a different version of "dumb can't beat dumb." Not that he's calling himself dumb, but his unhappiness in the engagement reminds me of my own, & my unhappiness comes from not being able to figure out the right answer, to figure out the new way of thinking about the problem, the answer that will solve our troubles (& "our" includes those inside & outside the academy). As if it were all janitorial work & you couldn't ever get the grease off your hands.

In closing, let me say the single phrase "In the Shadow of the Oversexed Women" is better than everything I've ever written you. That's the kind of joke I admire. I have been thinking ever since, but not productively, about how translation is ripe for such jokes. (The tag that keeps ringing in my head is Eliot's "hot gates" for Thermopylae, the low for high substitution.) The Proust-work feels like it's on to something profound. (There's a type of deflavorizor, the guy who always wants to explain the importance of the joke.)

Here's all I've come up w/so far: translation is an example par excellence of applying a grammar to a problem & how that problem has the resources to ceaselessly resist the grammar. The deconstructive lesson (what gets taught as deconstruction) is all about vertiginous glee (nobody knows nothing), but that blankets over certain palpable sharpnesses i.e. the words "strapping, buxom, oversexed" all have nice edges to them. The joke is on us & not the French, but it took Proust to bring it out.

& hints of a vision of a pluralist utopia form on the screen. Starring the Marx Brothers making their translation from borscht to blue-bloods.

+ + +

Regarding yesterday's entry, here's bhikku:

...which reminds me of the joke about the two Irishmen passing the forest and seeing the sign saying Tree Fellers Wanted. "Isn't it a shame", says Pat, "that there's only the two of us."

... to be continued ...

| . . . 2004-03-08 |

One possible definition of a function is that it is something whose implementation can be seen as 'contributing to the survival and maintenance of an organism'. But this gets us into the familiar circularity that bedevils ('classical') evolutionary functionalism in biology as well:

- The fact that an organism is alive at a given time shows that it is 'fit to survive'; i.e. 'this (living) organism is fit' is analytic.

- In the case of an organism that has failed to survive, the only ones where we actually know the precise cause of extinction usually do not give evidence of maladaptation in the usual sense: i.e. for the immanent 'unfitness' of the organism itself. ... If for instance the passenger pigeon had been maladapted, it would not have been as common as it was; by all criteria except edibility and vulnerability to shot it was a superbly adapted and successful organism. It is rather the case that human technology and greed were such that nothing could have survived under those precise conditions.

* * *

I suggest that what really counts, the first reason we have for believing in the potential fruitfulness of a type of explanation, and for holding onto it in the face of a lack of obvious warrantability (or even in the face of evidence that it makes no sense) is some kind of criterion of INTELLIGIBILITY, which serves as a quasi-esthetic control on the evaluation of explanations. I think that we often judge (what we call) the 'explanatory' power of a statement or model on the basis of the PLEASURE, of a very specific kind, that it affords us. This pleasure is essentially 'architectonic'; the structure we impose on the chaos that confronts us is beautiful in some way, it makes things cohere that otherwise would not, and it gives us a sense of having transcended the primal disorder.

- On Explaining Language Change, Roger Lass

Functionalist arguments tend to take the following form:

But maybe the trait has nothing to do with functionality, except insofar as it didn't kill all humanity before breeding age. Or maybe there was a wide range of functional possibilities, in which case we still haven't explained why this is the one we got. Or what we see is non-optimal genetic detritus left by something that was once optimal in some way we can't imagine. Maybe it's genetic cruft that became optimal later. Maybe some eccentrics somewhere don't show the trait and yet still somehow manage to be classified as human.

Prehistory involved too many unknowable factors, human culture is too volatile and varied, and confirmation or disproof is too unlikely for the hypothesizing of "evolutionary psychology" and "evolutionary sociology" to be much better than a Just-So Story.

As a harmless diversion, is it at least no worse than a Just-So Story?

That would depend on just how the diversion is used. A fable which asserts inevitability and hierarchical value with the language of psychology and ethics might be handy in all sorts of situations. Greed and selfishness aren't personal failings or noxious to society; they're the fucking foundations of life itself, boy!

And who's going to argue with life?

You and what army?

on a roll

Unfortunately I'm unsure whether that's as in "One tuna melt..." or as in "...of a die will never abolish chance." Both are possibilities according to another reader, who's drilled for a while without striking bedrock:

Greed and altruism both, and your mom's combat boots and the propensity to screech at pain and the stoic impulse, nature plays dice, late at night, when God sleeps

Almost makes me wonder if life even needs foundations. Doesn't a foundation tend to limit mobility? The poor thing's loaded down with baggage as it is.

Lawrence L. White seconds my query:

An excellent question! As I'm always saying (& I have noticed the repeating myself thing, & I am dreading how this will get worse as I get older), if there's one thing I learned from Wittgenstein, it's "don't forget why you're asking the question in the first place!" What problem are we trying to solve?

Ah, that hard-nosed goal-driven Wittgenstein.... Is a hint to be found in this reader's suggestion?

Life is an undergarment

but what about the survival and maintenance of an orgasm?

Dr. Lass doesn't touch on that topic, but Dr. Funkenstein covers it somewhere -- On Provoking Language Change, maybe? Speaking of whom:

George Clinton was a functionalist and look where it got him

Actually, I think the good Doctor is, like me, more of a believer in Cosmic Slop and Mother Wit.

| . . . 2004-03-17 |

I read once of a man who was cured of a dangerous illness by eating his doctor's prescription which he understood was the medicine itself. So William Sefton Moorhouse imagined he was being converted to Christianity by reading Burton's Anatomy of Melancholy, which he had got by mistake for Butler's Analogy of Religion, on the recommendation of a friend. But it puzzled him a good deal.

- Note-Books, Samuel Butler

Wittgenstein (I may only have 1 pony but you can't make me stop riding him!) wanted to use a quotation from Bishop Butler (who wrote the Analogy) as the motto for the Philosophical Investigations: Everything is what it is & not something else. Which leads to the musical question: who has more respect for difference: the one who feeds everything into the differance meat-grinder, or the one who takes a look at each individual thing & asks, how is this unique? & after that number we have the big dance scene.

LW ended up settling for a quotation from this guy named Nestroy (these Germans & their library-full of culture!), which went something like, "Everything that appears to be a great step forward later turns out not to have been as big a deal as you thought." I'm not sure how I'm going to block out that scene.

| . . . 2004-07-08 |

Whereof one can speak, thereof one must natter on and on until everyone is sick of the subject.

Well then natter, for Pete's sake!

| . . . 2005-10-25 |

The Transition to Language,

ed. Alison Wray, Oxford, 2002

If DNA analysis has secured the there-that's-settled end of the evolutionary biology spectrum, language origins lie in the ultra-speculative. As a species marker and, frankly, for personal reasons, language holds irresistible interest; unfortunately, spoken language doesn't leave a fossil record, and neither does the soft tissue that emits it. In her introduction, Alison Wray, while making no bones about the obstacles faced by the ethical researcher, suggests we use them as an excuse for a game of Twister.

Advanced Twister. Forget about stationary targets; the few points of consensus among Wray's contributors are negative ones:

The most solid lesson to take away from the book is a sense of possibility. Such as:

****WARNING: SPOILERS****

I think it was David Hume who defined man as the only animal that shoots Coca-Cola out its nose if you tell it a joke while it's drinking. In all other mammals, the larynx is set high in the throat to block off nasal passages for simultaneous nose-breathing and mouth-swallowing.

The same holds for newborns, which is why they can suckle without pausing for breath. In about three months, our larynx starts moving down our throat and we begin our life of burps and choking. About ten years after that's finished, boys' voices break as their larynxes lower a bit more.

Aside from the comic potential, what we gain from all this is a lot of volume, a freer tongue, and a much wider range of vowel sounds.

Got that? Good, because it's wrong! In the year 2000 Fitch realized that dogs and cats sometimes manage to produce sounds above a whimper. Embarrassingly, living anatomy's more flexible than dead anatomy. When a barking or howling dog lifts its head, its larynx is pulled about as far down its throat as an adult human's, thus allowing that dynamic range the neighbors know so well.

However, humans are unique in having a permanently lower larynx.

Almost. As it turns out, at puberty the males of some species of deer permanently drop their larynxes and start producing intimidating roars as needed.

Why would evolution optimize us for speech before we became dependent on speech? To generalize from the example of deer and teenage boys, maybe the larynx lowered to make men sound bigger and more threatening?

That would explain why chicks dig lead singers. It fails to explain why chicks particularly dig tenors, or why chicks can talk. As has happened before in science, I fear someone's been taking this "mankind" thing a bit too literally. Mercifully, Fitch goes on to point out that in bird species where both sexes are territorial, both sexes develop loud calls, and so there may be a "Popeye! Ooohhhh, Popeye!" place for the female voice after all.

On a similar note....

We understand how a vocabulary can be built up gradually. But how can syntax?

Having, like the other contributors, rejected genetic programming as an option, Okanoya thinks syntax began as a system of meaning-free sexual display before being repurposed: grammar as melody. The bulk of his article is devoted to the male Bengalese finch, each of whom hones an individualized song sequence over time, listening to its own progress rather than relying on pure instinct or pure mimicry — sorta like how a cooing babbling infant gradually invents Japanese, right? Right?

Drifting further from shore, Okanoya speculates that "singing a complex song may require (1) higher testosterone levels, (2) a greater cognitive load, and (3) more brain space." And a bit further: "Since the ability to dance and sing is an honest indicator of the performer's sexual proficiency, and singing is more effective than dancing for broadcasting...." And as we wave goodbye: "... the semantics of a display message would be ritualistic and not tied into the immediate temporal environment and, hence, more honest than the news-bearing communication that dominates language today."

As an aesthete, I'm charmed. As a skinny whiney guy with a big nose, I'm relieved to learn that Woody Allen really was the sexiest man in the world. And yet why does Mrs. Bush exhibit more coherent syntax than Mr. Bush? Does she really have more testosterone?

Perhaps we could broaden the notion of "sexual display" a bit. In a communal species, wouldn't popularity boost one's chance at survival and reproduction regardless of one's sex?

At any rate, Okanoya's flock of brain-lesioned songbirds should win him a Narbonic Mad Science Fellowship.

A straightforward "everyone said that only human beings can do this but actually monkeys can do it too" piece. In this case, monkeys can learn how to enter a seven-digit PIN on a cash machine which changes all the positions of the buttons every time they use it, except it's a banana-pellet machine and photographs instead of digits. An ominous aside: "It is doubtful, however, that the performance described in this study reflects the upper limit of a monkey's serial capacity."

Towards the end, Terrace refers to recent research on language kind-of acquisition among bonobos. Unlike the common chimps on whom we've wasted so many National Geographic specials, bonobo chimps can learn some ASL and English tokens purely by observing how humans use them. Still, there's no evidence that their use reflects anything more than hope of reward. When it comes to utterly profitless verbiage, humanity still holds the edge!

The candidate's a waffling policy wonk. Unelectable.

Language has words and grammar; communication has expressions. Wray focuses on units of expression which we never consciously break down into units of language, claiming that "a striking proportion" of formulas, idioms, cliches, and Monty Python recitations are manipulative or group defining signals rather than informative messages.

What we call "communication" among non-human species consists pretty exclusively of such signals, and so it is puzzling that human language doesn't deal with them more directly and efficiently. Wray's solution to the puzzle supposes a protolanguage that was all message, no words: "layoffameeyakarazy", "voulayvoocooshayavekmwasusswar", and so forth.

As any walk through a school cafeteria will remind us, the expressivity available to holistic formulaic language is pretty limited, which (says Wray) is why hominids stayed stuck in a technological rut for a million years. Meanwhile, analytic language developed slowly and erratically as a more or less dispensable, but very useful, supplement to holistic utterances.

Until it, um, became all we had and we were forced to cobble together holistic messages in our current peculiar way.

Thump. On the holistic side, there are tourists' phrasebooks, aphasics who can memorize (but not create) texts, and pundits who quote and name-drop in lieu of comprehension. But it seems problematic to claim that language derives from the holistic. On the contrary, Wray's evidence indicates that, although the need is there, language does a pretty poor job of meeting it.

A prole in a poke. Despite the title and the opening citation from Marx & Engels, Knight's worried about how materialism might have blocked the development of language.

Human children become more linguistically skilled when treated pleasantly by their parents, but other great apes don't show much affection towards their offspring. Similarly, there wouldn't be much reason to learn language in a culture where everyone lied all the time, but a gorilla's most altruistic and cooperative signals tend to be the exclamations it can't repress. Homo nonrepublicanis is the only ape to evolve sincerity.

What caused this awful mishap? Well, Chris Knight has this theory that all of human culture all over the world began when women's genetic material realized that they'd have a better chance to win the Great Game if men couldn't tell when they were menstruating, since the men's genetic material would be inclined to seek out more reproductively active genetic collaborators at such times. Ding-dong, Red Ochre calling! — and the rest is history.

As for language? Hey, didn't you read the part about this explaining "all of human culture"? Isn't language part of human culture? Q.E.D.

[Inclusive as Alison Wray strove to be, some hurt feelings were bound to occur, and as far as preposterous anthropological mythmaking goes, Eric Gans may beat Knight. For one thing, Gans's story would be easier to get on the cover of a science fiction pulp. For another, it emphasizes the inhibitory aspect of non-mimetic representation.

For a third, it deals with a central riddle of language evolution (as opposed to the evolution of language). Some linguistic changes seem reliably unidirectional. For example, highly inflected languages are harder to learn than subject-verb-object ordered languages; when cross-cultural contact (or cultural catastrophe) occurs, languages downgrade inflection in favor of word order; and there are no known examples of an order-based language evolving more reliance on inflection.

So where did those inflected languages come from? An even more inflected, difficult, and unwieldy language? That doesn't sound like a very practical invention.

Gans has a simple fix: Language wasn't meant to be practical. Luther and Tyndale shouldn't have gotten so exercised over Greek New Testaments and Latin Masses; incomprehension's the original sacred point.

Not that I believe any of this. I just think it's cool. Jock-a-mo fee-nah-nay.]

A lucid summary of Corballis's recent work without its iffier aspects.

All of this suggests (or at least doesn't disprove) that "language" could've evolved gesturally long before it became vocal. Once audible intentional vocalizing was biologically possible, there'd be good reasons to switch: yelling would cover a wider distance; semantic tokens would be more stable; it would allow conversation during tool manufacture and use.

And as proven by infants and tourists, it's possible to add vocalization gradually to gesturally based communication, avoiding that awkward "everything at once or nothing at all" scenario.

Thumbs up, as they say.

A glum warning against reading too much into much-too-selected evidence. In this case, the too much is complex planning that would require language's help, and the much-too-selected are so-called "hand-axes" which might, from raw statistical evidence, be accidental by-products rather than intentional products of an industry.

Bickerton wants to get back to the real reason for human communication: better food and plenty of it. As a student of menu French and Italian, I'm in no position to argue.

Actually, he's pretty mild-mannered about it. Elsewhere, he's guessed that syntax is rooted in reciprocal altruism. But since there's no evidence that hominids dealt with any more social complexity than other primates, he doesn't believe social conditions alone could've triggered a change as drastic as predicated language.

The conditions which did radically distinguish our ancestors from their primate relatives were environmental. Instead of living large in the forest, hominids roamed savannahs full of predators and fellow scavengers, and did so successfully enough to expand out of Africa. Also, unlike the socially-focused great apes, humans are capable of observing and drawing conclusions from their surroundings. (To put it in contemporary terms: Driving = environmental interaction; road rage = social interaction.) Any ability to observe and then to reference would be of immediate use to a foraging and scavenging species. Predication might develop from a toddler-like combination of noun and gesture ("Mammoth thisaway"), and lies would be easily detected and relatively profitless.

It's unlikely that human language's primal goal was to accurately communicate an arbitrary two-digit number. At that level of abstraction, about all computer simulations can do is disprove allegations that something's impossible. So, ignoring the metaphors, this paper shows it's possible to improve communication of two-digit numbers across generations of weighted networks without benefit of Prometheus.

Bringing the metaphors back in, they report that smaller populations and an initially restricted but growing number of inputs are helpful when establishing a stable "language", and point out that "because many sensory capabilities are not available at birth, the child learns its initial categorizations in what is effectively a simplified perceptual environment." (We'll come back to this in a bit.)

More computer simulation; worse anthropomorphizing. Sponsorship by the Sony Corporation might have something to do with that, and with inviting the public to interfere through a web page and at various museums. The number of breakdowns introduced by this complexity is left vague, but the project seems to have earned a Lupin Madblood Award for Ludicrously Counterproductive Publicity Stunts.

Too bad, because it's a great idea. Instead of modeling perception and language evolution separately, the project combines the two with gesture in an "I Spy" guessing game. Two weighted network simulations have access to visual data through a local video camera, have a way to "point" at particular objects (by panning and zooming), and can exchange messages and corrections to each other. The researchers monitor.

With the usual caveat about how far analogies should be carried, some of the results are enjoyably suggestive. A global view isn't needed to establish a shared vocabulary. Communication can be successful even with slightly varying interpretations and near synonyms. Again, it helps if the initial groups are fairly small, and if the complexity of the inputs increases over time.

Between Creole formation, sign languages, and computer simulations, we now have a few examples of language evolution to look at. Could it be that grammar isn't genetically programmed? Could it be that social conditions play a part in the development of syntactical language!?

Well — yes. But Ragir's attack on genetic programming is kind of a MacGuffin anyway — a good excuse to cover some interesting ground.

Ragir compares nine sign languages, and, where possible, their histories and the circumstances of their users-and-originators. That (limited) evidence shows it's possible for a context-dependent quasi-pidgin to go for some generations. Grammaticalization and anti-semantic streamlining of illustrative gestures seem to happen gradually rather than catastrophically. They're introduced by children rather than adults, and only when peer contact is encouraged. The emergence of syntax is socially sensitive.

Returning to her MacGuffin, Ragir proposes:

that we consider 'language-readiness' as a function of an enlarged brain and a prolonged learning-sensitive period rather than a language-specific bioprogram. In other words, as soon as human memory and processing reached a still unknown minimum capacity, indigenous languages formed in every hominine community over a historic rather than an evolutionary timescale. As a result of species-wide delays in developmental timing, a language-ready brain was probably ubiquitous in Homo at least as early as half a million years ago. [...] As for what triggered the increase in brain size that supports language-readiness...

Here's where we come back to that thing I said we'd come back to. A human newborn is in pretty bad shape compared to the newborns of a lot of other species, and stays in pretty bad shape for a pretty long time. As Nature vs. Nurture combatants seem unable to get through their now-hardened skulls, this lets human infants and children undergo more physical — and specifically neurological — transformation while immersed in a social context.

Although that can be entertaining, maintenance is an issue. And in a savannah environment, dependent on wandering and surrounded by predators, maternity or paternity leaves would be hard to procure. It's nice that our plasticity encourages language, but what would've encouraged our plasticity?

Definitionally, hominids are featherless bipeds. But, as some readers will vividly recall, bipedalism raises a difficult structural engineering problem: If you're going to walk on two legs, there's a limit to how wide your hips can get; narrow hips limit what you can give birth to. Mother Nature's endearingly half-assed solution was to make what we give birth to more compressible.

And since the kids were going to be useless anyway, they might as well be smart.

An attack on the all-or-nothing idea of syntax which so exercised Chapter 3. While we're growing up, syntax development isn't catastrophic, and Burling says it's even more gradual than it looks. Infants comprehend some syntactic clues long before they can reproduce them. And command of syntactical rules continues to grow long after children are reading and writing recognizable sentences. (Hell, sometimes I'm still faking it.) So why think it had to be all-or-nothing species wide?

Another oppositional piece. Did language develop purely from primate calls? Or purely as a representation of our own mental activity as sum fule say? Or purely as an excuse for alliteration?

I threw in that last choice myself, but you see the problem. The options aren't exclusive, and introspection doesn't yield universally applicable results. For example, it may be true that "devices such as phonology and much of morphology" "make no contribution to reasoning" as experienced by Pinker and Bloom and Hurford, but they surely do to mine.

Still, not a bad resource when you're bored by the usual arguments against Sapir-Whorf: If our thinking was determined by language, we'd all be completely batshit.

So, have you heard that Universal Grammar might not be genetically programmed?

Although the impact of their dissent's weakened by its placement, Christiansen and Ellefson do well with the set-up:

Whereas Danish and Hindi needed less than 5,000 years to evolve from a common hypothesized proto-Indo-European ancestor into very different languages, it took our remote ancestors approximately 100,000-200,000 years to evolve from the archaic form of Homo sapiens into the anatomically modern form, sometimes termed Homo sapiens sapiens. Consequently, it seems more plausible that the languages of the world have been closely tailored through linguistic adaptation to fit human learning, rather than the other way around. The fact that children are so successful at language learning is therefore best explained as a product of natural selection of linguistic structures, and not as the adaptation of biological structures, such as UG.

Their eclectic research is held together by one common ingredient: learning an "artificial language" with no semantics outside its visual symbols. This reduces "language" to the ability to pick up and remember an arbitrary rule behind sequences. Admittedly, that's not much of what language does, but it includes some of what we call grammar.

Strengthening the association, in a clinical study, agrammatic aphasics did no better than chance in absorbing the rules behind the sequences. And brain-imaging studies have found similar reactions to grammatical errors, game rule violations, and unexpected chords in music.

Next, Ellefson and Christiansen look at a couple of common grammatical tendencies: putting topic words at the beginning or end rather than the middle of a phrase, for example, or structuring long sequences of clauses in orderly clumps. In both cases, we've picked patterns that reduce the cognitive load. Artificial grammars which followed these rules were learned more easily than ones which didn't, both by human subjects and by computer simulations.

Newmeyer begins by agreeing with the general consensus that you can't tell much about a culture from its language. That doesn't mean there are no major differences between languages, though. Or that there weren't even more drastic differences between prehistoric languages and the languages we know. Or that we really know anything about the prehistoric cultures themselves. Or when language started. Or how often. Or the physical capabilities of the speakers.

In fact, we have no facts. We're fucked.

Heine and Kuteva soldier on, trying to boil down a fairly reliable set of rules for language change and deduce backwards from them. Each visible grammatical element in turn is shown (in the examples they choose) to be derivable from some earlier concrete noun or action verb. The basic principle should be familiar from ethno-etymologically crazed types like Ezra Pound: the full weighty penny of meaning slowly worn down by calloused palms into a featureless devalued token....

Fitting the general tone of the book, though, they close with a warning that their approach is based on vocabulary rather than syntax, and so, even assuming one-way movement away from "a language" consisting only of markers for physical entities and events, we still can't say much about how they might have been put together.

And so, in conclusion, say anything.

Over at the Chrononautic, Ted Chiang suggests an alternative, and perhaps wiser, conclusion: shut up.

| . . . 2007-11-12 |

I find it surprising that you are so sweepingly dismissive of philosophy, as a discipline, frankly. Wittgenstein, Austin, Searle, Dennett, Putnam, Kripke, Davidson, lord knows I can rattle on if you get me started [...] it's all crap, or arid twiddling, you assume? You are, of course, entitled to your opinion. I'm not offended, or anything, but I'm a bit surprised. It's a fairly unusual attitude for someone to take, unless they are either 1) John Emerson; 2) strongly committed to continental philosophy, from which perspective all the analytic stuff looks crap; 3) opposed to interdisciplinarity, per se.

- John Holbo, in a comment thread

I have sometimes characterized the opposition between German-French philosophizing and English-American philosophizing by speaking of opposite myths of reading, remarking that the former thinks of itself as beginning by having read everything essential (Heidegger seems a clear case here) while the latter thinks of itself as beginning by having essentially read nothing (Wittgenstein seems a case here). [...] our ability to speak to one another as human beings should neither be faked nor be postponed by uncontested metaphysics, and [...] since the overcoming of the split within philosophy, and that between philosophy and what Hegel calls unphilosophy, is not to be anticipated, what we have to say to one another must be said in the meantime.

- Stanley Cavell, "In the Meantime"

I should acknowledge that John's question wasn't addressed to me. Also, that I'm no philosopher. I begin by having read a little, which makes me an essayist — or, professionally speaking, an office worker who essays. I'm going to appropriate John's question, though, because some of the little I've read is philosophy and because essaying an answer may comb out some tangles.

Restricting myself to your menu of choices, John, I pick column 2, with a side of clarification: Although that menu may indicate a snob avoiding an unfashionable ingredient, it's as likely the chef developed an allergy and was forced to seek new dishes. I wasn't drawn to the colorful chokeberry shrubs of "continental tradition" (and then the interdisciplinary slap-and-tickle of the cognitive sciences) until after turning away from "philosophy, as a discipline." Before that turn, I was perfectly content to take Bertrand Russell's word on such quaint but perfidious nonsense.

In fact I came close to being an analytic philosopher — or rather, given that I'd end up working in an office no matter what, being someone with a degree from an analytic philosophy department. On matriculation I wanted coursework which would prod my interest in abstract analysis, having made the (warranted) assumption that my literary interests needed no such prodding. The most obviously abstractly-analytical majors available to me were mathematics-from-anywhere or anglophilic Bryn Mawr's logic-heavy philosophy degree. As one might expect from a teenage hick, my eventual choice of math was based on surface impressions. The shabby mournfulness of Bryn Mawr's department head discouraged me, and, given access for the first time to disciplinary journals, I found an "ordinary language" denatured of everything that made language worth the study. In contrast, the Merz-like opacity of math journals seemed to promise an indefinitely extending vista of potentially humiliating peaks.

Having veered from Bryn Mawr's mainstream major, my detour into Haverford's eclectic, political, and theologically-engaged philosophy department was purely a matter of convenience — one which, as conveniences sometimes do, forever corrupted. I left off the high path of truth: Abstract logic fit abstractions best: natural language brought all of (human) nature with it. As I wrote in email a few years ago, it seemed to me the tradition took a wrong turn by concentrating on certainty to the exclusion of that other philosophical problem: community.

* * *

I'd guess, though, that besides expressing curiosity your query's meant to tweak the answerer's conscience.

At any rate, it successfully tweaked mine. To paraphrase Hopsy Pike, a boy of eighteen is practically an idiot anyway; continuing to restrict one's options to what attracted him would be absurd.

I don't mean I'll finally obtain that Ph. B., any more than I ever became a continental completist. No, I just think my inner jiminy might be assuaged if I gathered some personal canon from the twentieth-century Anglo-American academic tradition.

Cavell, instantly simpatico, will likely be included, but one's not much of a canon. By hearsay Donald Davidson seemed a good risk, and recently a very kind and myriadminded friend lent me his immaculate copy of Subjective, Intersubjective, Objective.

Davidson's voice was likable, and I was glad to see him acknowledge that language is social. But I was sorry he needed to labor so to get to that point. And then as the same point was wheeled about and brought to the joust again and again, it began to dull and the old melancholy came upon me once more. Could these wannabe phantoms ever face the horrible truth that we're made of meat?

With perseverance I might have broken through that shallow reaction, but I didn't want to risk breaking the spine of my friend's book to do it. I put it aside.

And then, John, you tweaked my conscience again:

If you just want a reference to post-Wittgensteinian analytic philosophers who think language is a collective phenomenon and who are generally not solipsists, that's easy: post-Wittgensteinian analytic philosophy as a whole.

Because, of course, my shallow reaction to the Davidson sample might well be expressed as "My god, they're all still such solipsists."

* * *

I remember one other "Farewell to all that" in my intellectual life. At age eight, I gave up superhero comic books.

The rejection was well-timed. I'd experienced Ditko and Kirby at their best; I'd seen the Silver Surfer swoop through "how did he draw that?" backgrounds I didn't realize were collaged. After '67, it would've been downhill.

But eventually, in adulthood, I guilt-tripped back again.

With iffy results, I'm afraid. I greatly admire Alan Moore's ingenuity, but that's the extent of his impact. Jay Stephens's and Mike Allred's nostalgic takes are fun, but I preferred Sin and Grafik Muzik. Honestly, the DC / Marvel / Likewise product I look at most often is Elektra: Assassin, and I look at it exactly as I look at Will Elder.

No matter how justly administered, repeated conscience tweaking is likely to call forth a defensive reaction. And so, John, my bruised ignorance mutters that Moore showed far less callousness than Davidson regarding the existential status of swamp-duplicates (Davidson talks as if the poor creature's not even in the room with us!) and wonders if AAA philosophers' attention to collective phenomena might not parallel attempts to bring "maturity" to superhero comics:

"We've got gay superheroes being beaten to death! We've got female superheroes getting raped! We've got Thor visiting post-Katrina New Orleans! How can you say we're not mature?"

Because immaturity is built into the genre's structure.

Similarly, whatever it is I'm interpreting as microcultural folly might be the communally-built structure of academic philosophy, and leaving that behind would mean leaving the discipline — as, I understand, Cavell's sometimes thought to have left?

Well, Davidson I'll return to. In the meantime, I bought an immaculate Mind and World of my own to try out. After all, any generic boundaries feel arbitrary at first, and, fanboy or not, I still own some superhero comic books....

Peli:

1) Wilfrid Sellars 2) Grant Morrison [the set is "practitioners who turn the faults of their framing genre into merits by seriously thinking about why they embrace them, allowing this understanding to shape their practice"]

John Holbo sends a helpful response:

Quick read before I get on the bus. That comment you quote is a bit unfortunate because, in context, I wasn't actually complaining about Bill not studying philosophy as a discipline. I was objecting to his claim that there was nothing interesting about post-Wittgensteinian Anglo-American philosophy. It has nothing to say about language or mind or any of the other topics that interest Bill. It isn't even worth giving an eclectic look in, to borrow from, in an interdisciplinary spirit. Bill is an interdisciplinarian who makes a point of steering around the philosophy department - not even giving a look-in - when it comes to language, intentionality and mind. I find that combination of attitudes perverse. So rather than saying 'opposed to the discipline' - hell, I'M opposed to analytic philosophy as a discipline (how not?) - I should have typed: 'convinced that it is a giant lump of crap that does not even contain a few 14k bits of goldishness'. Bill and I were arguing about whether there might not be bright spots in post war Anglo-American philosophy. I said yes. He said he assumed not. (He assumes it must all just be solipsism, ergo not helpful.)

Another point. "Could these wannabe phantoms ever face the horrible truth that we're made of meat?" I think it's a wrong reading of various fussy, repetitive approaches to materialism and mind to assume that people are shuffling their feet because they are FEARFUL of letting go of, maybe, the ghost in the machine. Rather, they are caught up in various scholastic debates and are hunched down, porcupine-wise. They are anticipating numerous attacks, serious and foolish, pettifogging and precise. In Davidson's case it's always this dance with Quine and empiricism. (I could write you a song.) But shying away from the very idea that we're made of meat isn't it, spiritually speaking. This lot are fearless enough, at least where positions in philosophy of mind are concerned. They're just fussy.
(Not that waddling along like a porcupine is any great shakes, probably. But it isn't exactly a fear reaction. It's the embodiment of an intellectual strategy.)

Is that a porcupine or a hedgehog, then.

Reckon it depends on whether you're American or Anglo.

I wish this was the conclusion of a review of The Gay Science, but it's just the conclusion of a review of In Kant's Wake: Philosophy in the Twentieth Century:

In the 100-year struggle for a philosophical place in the sun, analytic philosophy simply won out — by the end of the twentieth century it was the dominant and normal style of philosophy pursued in the most prestigious departments of philosophy at the richest and most celebrated universities in the most economically and politically powerful countries in the world. [However] In Kant's Wake shows that there are some serious unresolved issues about the history of twentieth-century philosophy that every serious contemporary philosopher should be seriously interested in.

Always a pleasure to hear from Josh Lukin, here responding to Peli's comment:

Yeh, that's what's interesting about Morrison, for those of us who believe he succeeds at what he sets out to do: his self-reflexive attitude toward trotting out the Nietzsche and the Shelley and the Shakespeare to justify some old costumed claptrap. My clumsy undergraduate piece about that, "Childish Things: Guilt and Nostalgia in the Work of Grant Morrison," showed up in Comics Journal #176 and is cited here with more respect than it deserves.

Looking at comics with a maturity/immaturity axis in mind is great at explaining why Miller's Eighties work is more successful than Watchmen; but it has its limits, not least of which being that we've been down this road before in the superhero stories of Sturgeon, in PKD's (and H. Bruce Franklin's) critique of Heinlein, in Superduperman [find your own damn explanatory link, Ray [anyone who needs an explanatory link to Superduperman probably stopped reading me a long time ago. - RD]], etc. Like David Fiore, I find the Carlyle/Emerson axis (which, come to think of it, has its parallels in Heinlein vs. Sturgeon) to be more fruitful: are we talking fascist superhero stories or Enlightenment superhero stories and, if the former, does the aesthetic appeal of the fascist sublime outweigh the ethical horror?

| . . . 2013-01-25 |

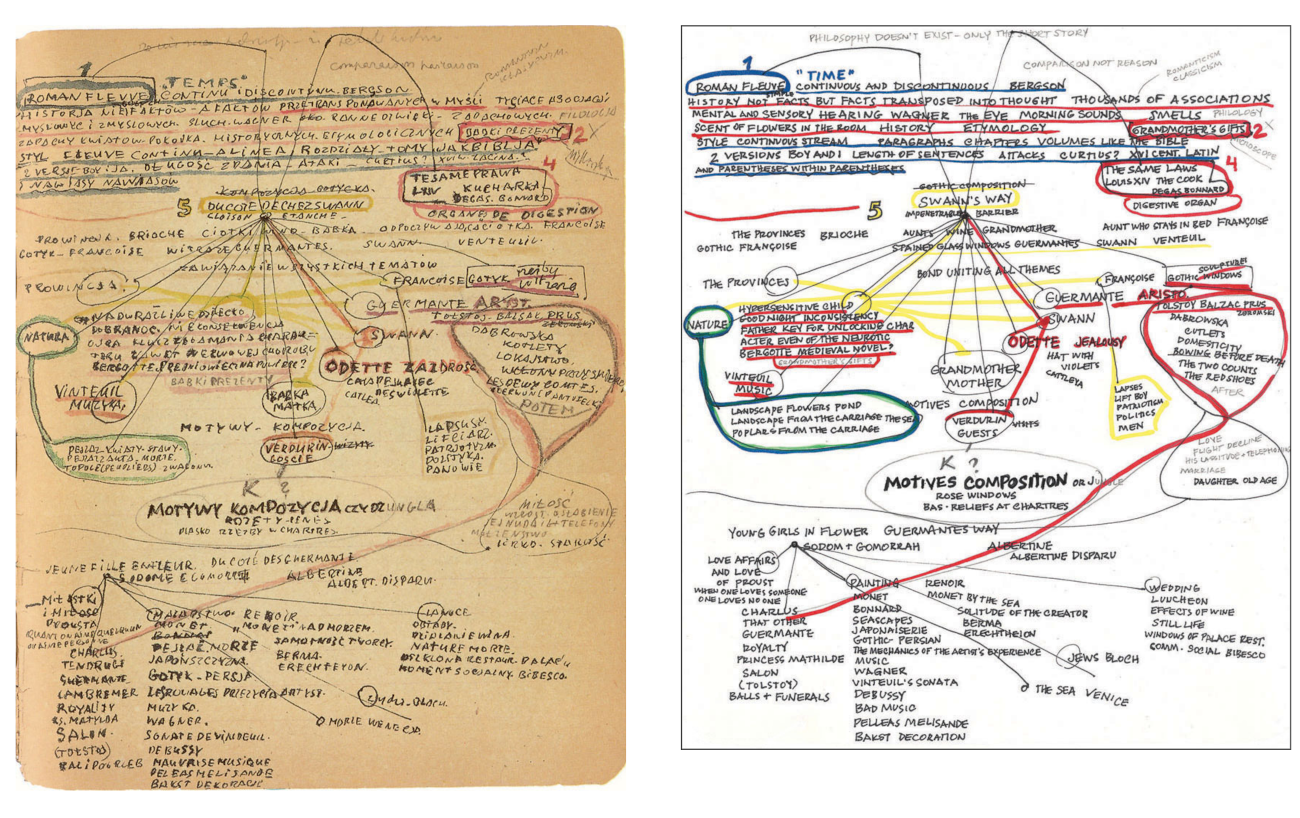

Now we no longer have the idea of a central truth. We now have more of an understanding about the thinking of others, that perhaps there is something in it: maybe it is an interesting angle, an interesting way to illuminate the matter, it's not false but perhaps it's not true either. It is a kind of transformation of language. Not in the sense of Wittgenstein's use of language but in the sense that we each have our own philosophical characters. Philosophy gets closer to what we can call novels; that is, we each have our own characters which we put into motion within one framework, and others have their own novels and characters but we can still read each other's novels.

Along similar lines, for a student in my time and place the great utopias (including Utopia) instruct best as leviathan self-portraits of the philosophers' souls. It's like one of those things where you get asked a bunch of questions and it turns out to be your porn star name.

The Republic could hardly have been more explicit about it. Socrates sets out to describe the just man, but a city is bigger than a man and it's easier to see big things — same principle as walking the school group through a giant plastic alimentary tract. Plato isn't promising to make the poets shut up. He's promising to make the Laws duller than the Phaedrus.

| . . . 2020-05-03 |

Diagrams are in a degree the accomplices of poetic metaphor. But they are a little less impertinent — it is always possible to seek solace in the mundane plotting of their thick lines — and more faithful: they can prolong themselves into an operation which keeps them from becoming worn out. [...] We could describe this as a technique of allusions.

- Figuring Space (Les enjeux du mobile) by Gilles Châtelet

- Ancient and Modern Scottish Songs, Heroic Ballads, &c., collected and edited by David Herd, 1776

Peli Grietzer and I are separated by nationality, education, career, three decades, and most tastes.1 Our fellowship has been one of productive surprises (rather than, say, productive disputes). And when Peli began connecting machine deep-learning techniques to ambient aesthetic properties like "mood" and "vibe," the most unexpected of the productive surprises represented a commonality:

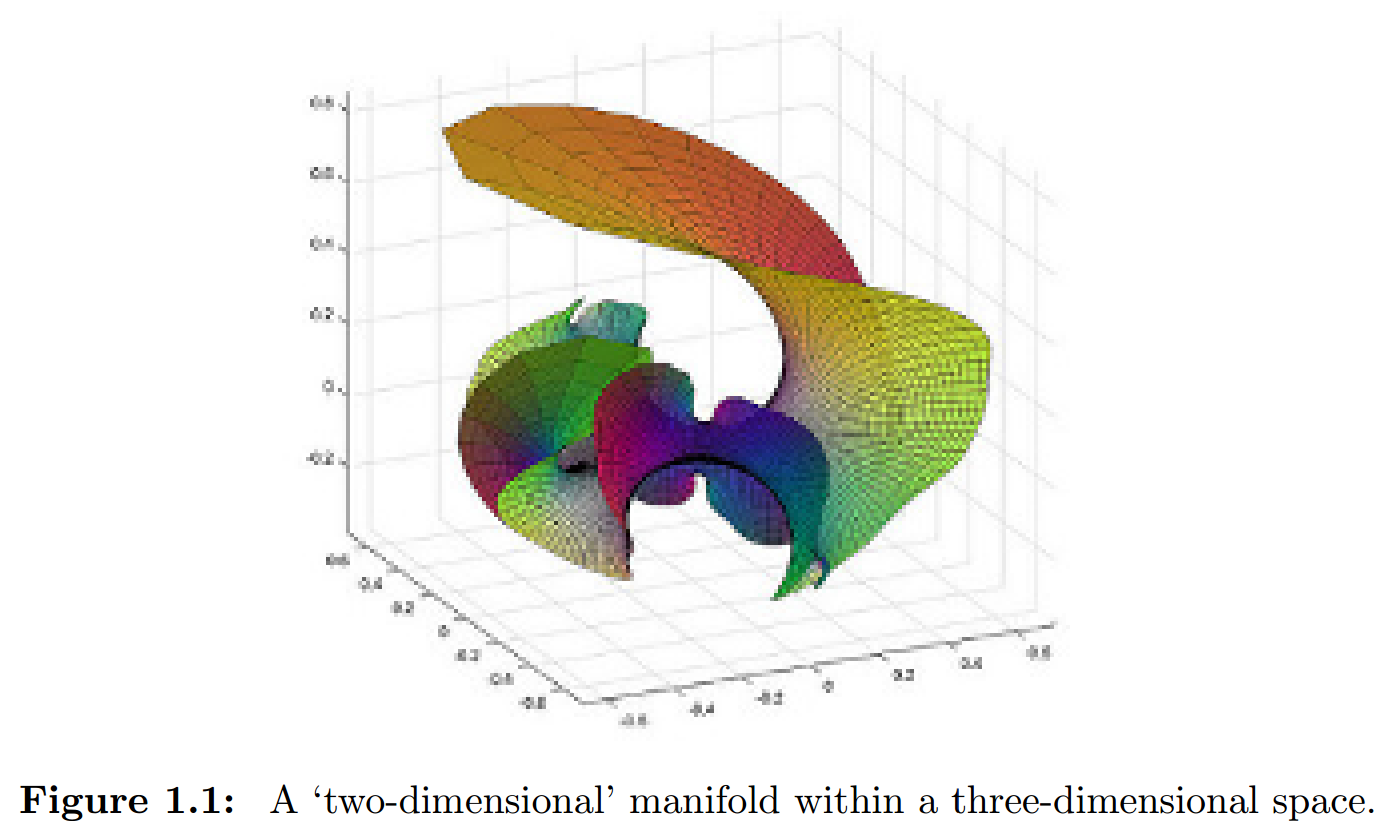

In relating the input-space points of a set’s manifold to points in the lower dimensional internal space of the manifold, an autoencoder’s model makes the fundamental distinction between phenomena and noumena that turns the input-space points of the manifold into a system’s range of visible states rather than a mere arbitrary set of phenomena.

"A Theory of Vibe" by Peli Grietzer

(If Peli's sentence made absolutely no sense to you — and if you can spare some patience — have no fear; you're still in the right place.)

For two-thirds of my overextended life, I've carried a mnemonic "image" (to keep it short) with me. It manifested as a jerry-built platform from one train of thought to another, satisfactory for its purpose, and then kept satisfying as a reference point — or rather, to maintain its topological integrity, as a FAQ sheet. It guided practice and (if suitably annotated) warned against attractive fallacies. It didn't overpromise or intrude; it answered when called for. Over time it became mundane, self-evident, not worthy of comment. Although I've described its assumptions, its space, its justifications, and its uses, I never did quite feel the need to describe it.

My "image" had emerged from a very different place than Peli's, carrying a different trajectory and twisting out its own series of crumpled linens. What I felt in late 2017 was not so much the shock of recognition as the shudder of recognition with a difference: not a registration error to correct in the next printing so much as a 3-D comic which was missing its glasses. Still, though, recognizably "the same," as if Peli had passed by the smoke and noise of my informal essayism and assembled something resembling the ramshackle contraption which belched them out.

Encounters with an opinion or sentiment held in common always seem worthy of remark, being always scarce on the ground. But some remarks are worthier than others, and greeting years of hard labor with a terse "Oh yes, that old thing," like I was a crate of RED EYE CAPS or something, would be a dickhead move. And so there came upon me the happy thought, "Since Peli has shown his work, it's only fair for me to show mine."

1 Some divergences in taste might be artifacts of our temporal offset: William S. Burroughs and Alain Robbe-Grillet anticipated Kathy Acker and Gauss PDF about as closely as a low-budgeted teenager outside a major cultural center might have managed in the late 1970s, and my side of our most eccentric shared interest, John Cale,2 was firmly established by age nineteen.

2 "Moniefald," in this instance, being more or less synonymous with "Guts."

- "Psyche" by Samuel Taylor Coleridge

Over the following two-plus years I reluctantly came to realize that "showing my work" may have been an overambitious target: the work that went into that "image" consisted of my life from ages three to twenty-two; the work that's come out of that "image" contains most of my publications and most of what I hope to publish in the future.

That it is useless

Given the evidence of my résumé — a mathematics degree "from a good school," followed by 35 years of software development — you might think it friendlier of me to contribute to Grietzer's research program than to reminisce about my own. Which goes to show you can't trust résumés.

I programmed only to make a living, and every time I've tried to program for any other purpose, no matter how elevated, my soul has flatly refused. "We made a deal," my soul says. "A deal's a deal, man."

Anyway, I don't think the software side of things matters much except as a conversation starter or morale booster. Computerized pattern-matching can be useful (and computerized pattern-generation can be entertaining) in their own right, but I'm in the camp that doubts any finite repeatable algorithmic process carries much explanatory power for animal experience, unless as an existence disproof that an omnisciently divine watchmaker or omnipotently selfish gene is required.

As for mathematical labor, the work-behind-that-"image" includes the romantic history of my coupling with mathematics: meet-cute, fascinated hostility, assiduous wooing, a few years of cohabitation, and finally an amicable separation. I sometimes think of my "image" as a last snapshot of the old gang gathered round the table before we were split up by the draft and divorces and emigrations.

I haven't spoken to mathematics since 1982. Getting properly reacquainted would take as much effort as our first acquaintance, and, speaking frankly, if I live long enough with enough gumption to repursue any disciplined study, I'd prefer it be French.

I am, however, OK with bullshitting about mathematics, and have very happily been catching up on the past forty years of philosophy-'n'-foundations. Turns out a lot's happened since Hilbert! (Yeats studies, on the other hand, haven't budged.)

That it is trivial

As mentioned above, I never felt a need to describe my "image," much less ask who else had seen it. Once I started looking, the World Wide Concordance easily found some pseudo-progenitors and fellow-travelers. Maybe my exciting personal discovery was something which virtually everyone else on earth already had, unspoken, under their belts, like masturbation, and my reaction to Grietzer's dissertation was a case of "shocked him to find in the outer world a trace of what he had deemed till then a brutish and individual malady of his own mind."

That it is redundant

Also as mentioned above, I've touched or traversed some of this ground before, and came near as dammit to sketching the "image" itself in a summary of development and beliefs which still pleases me by its concision. And here I sketched the transition from positivist analytic philosophy to pragmatic pluralism; there I described the heady combination of Kant and acid; a bit later I wrote all I probably need to write about aptitude and career....

Much of my dithering over this present venture comes down to fear that I'm taking a job which has already been perfectly adequately done, and redoing it as a bloated failure. God knows there are precedents.

That it is

There are two senses of "showing the work" in mathematics. What's typically published is a (proposed) proof, an Arthur-Murray-Dance-Studios diagram which indicates (one hopes) how to step down a stream of discourse from (purportedly) stable stone to stable stone until we've reached our agreed-upon picnic spot.

But closer to the flesh, very different sorts of choreography come into play. For the working mathematician, what impels and shapes the proof is the work of observation, intuition, exploration, and experimentation. For the teacher (depending on the teacher), tutor, or study group, the proof is meaningless without a motivating context and a sense of the mathematical objects being "handled," and those can only be conveyed by implication — by descriptions, metaphors, examples, images, gestures, and applications:

It is true, as you say, that mathematical concepts are defined by relational systems. But it would be an error to identify the items with the relational systems that are used to define them. I can define the triangle in many ways; however, no definition of the triangle is the same as the “item” triangle. There are many ways of defining the real line, but all these definitions define something else, something that is nevertheless distinct from the relations that are used in order to define it, and which is endowed with an identity of its own.

We disagree with your statement that the ideal form of knowledge in theoretical mathematics is the theorem (and its proof). What we believe to be true is that the theorem (and its proof) is the ideal form of presentation of mathematics. It is, in our opinion, incorrect to identify the manner of presentation of mathematics with mathematics itself.

- Indiscrete Thoughts by Gian-Carlo Rota

But what are we studying when we are doing mathematics?

A possible answer is this: we are studying ideas which can be handled as if they were real things. (P. Davis and R. Hersh call them “mental objects with reproducible properties”).

Each such idea must be rigid enough in order to keep its shape in any context it might be used. At the same time, each such idea must have a rich potential of making connections with other mathematical ideas. When an initial complex of ideas is formed (historically, or pedagogically), connections between them may acquire the status of mathematical objects as well, thus forming the first level of a great hierarchy of abstractions.

At the very base of this hierarchy are mental images of things themselves and ways of manipulating them.

"Mathematical Knowledge: Internal, Social and Cultural Aspects"

by Yu. I. Manin